News

Vol.83 June

- Achieved self-reliance in GaN semiconductor transmit/receive chips, which were previously dependent on imports

- Enables development and mass production of high-performance, high-efficiency chips, advancing defense technology

Korean researchers have developed localization technology for Gallium Nitride (GaN)1) monolithic microwave integrated circuits (MMICs)2) used in transmit/receive modules for military radars and satellites. This achievement is expected to contribute significantly to defense technology self-reliance by enabling the localization of key components not only for military radars but also for high-resolution Synthetic Aperture Radar (SAR)3) systems.

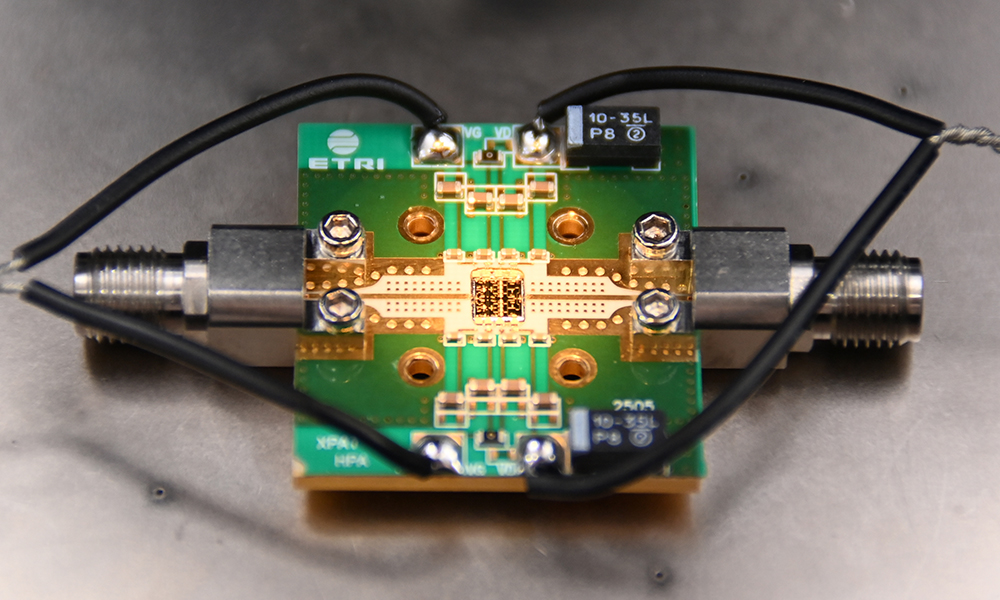

Electronics and Telecommunications Research Institute (ETRI), in collaboration with Wavice, has developed GaN-based transmit/receive MMICs for military and SAR radars, the first such chips in Korea to be built using fab-based technology.

These high-performance military semiconductor components, previously entirely dependent on imports, can now be developed with domestic technology, and a foundation for mass production at local facilities has been established. This marks a major R&D breakthrough. The results are expected to strengthen national defense autonomy and provide a strong response to export regulations.

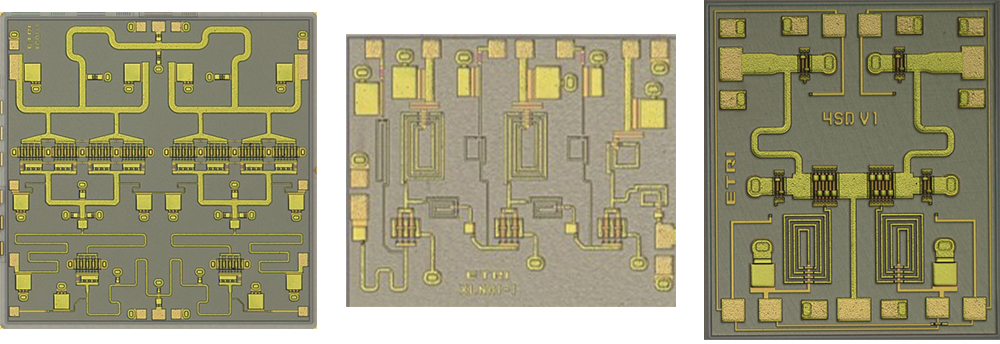

The research has been carried out since 2023 as part of the National Research Council of Science & Technology’s (NST) Creative Allied Project. By integrating ETRI’s semiconductor design technology with Wavice’s manufacturing process expertise, the team successfully developed three types of X-band4) transmit/receive chips.

1) Gallium Nitride (GaN): A compound semiconductor with high breakdown-voltage and high-power characteristics, suitable for high-frequency, high-power devices used in military radar, power electronics, and communication equipment.

2) MMIC (Monolithic Microwave Integrated Circuit): A high-frequency semiconductor chip integrating both active and passive elements on a single substrate, used in T/R modules.

3) Synthetic Aperture Radar (SAR): A radar system that uses radio waves to form images of terrain and structures, enabling precise ground observation regardless of weather or lighting conditions.

4) X-band: A frequency range of 8–12 GHz widely used in radar and satellite communications, particularly in military and aerospace.

X-band GaN Power Amplifier MMIC (Left)


The key components developed include ▲ Power Amplifier (PA)5), ▲ Low Noise Amplifier (LNA)6), and ▲ Switch (SW)7) MMICs.

These components demonstrate performance on par with commercial products from leading foundries in the U.S. and Europe, and they are the first results produced using Korea’s only GaN mass-production fab.

Compared with conventional Gallium Arsenide (GaAs)8)-based products, the newly developed GaN MMICs offer higher output power and efficiency, which is expected to dramatically improve the performance of military and satellite communication systems, especially Active Electronically Scanned Array (AESA) radars9).

AESA radar is a state-of-the-art radar technology capable of electronically steering beams to rapidly detect and track targets. It comprises an antenna with multiple transmit/receive modules. Each module integrates a power amplifier to boost transmission signals, a low-noise amplifier to receive signals cleanly, and a switch to toggle between transmit and receive modes.
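The article does not explain how an AESA radar steers its beam electronically. Under the standard textbook model of a uniform linear array, each element is fed with a phase shift that grows linearly along the array, with a step of Δφ = 2πd·sin(θ)/λ between neighboring elements spaced d apart, to point the beam at angle θ from broadside. The sketch below computes those per-element phases. The function name `steering_phases_deg`, the 10 GHz operating frequency (inside the 8–12 GHz X-band), and half-wavelength spacing are illustrative assumptions. In a real radar, this phase control is handled by dedicated circuitry, not by the PA, LNA, and switch chips described in this article.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def steering_phases_deg(num_elements, freq_hz, spacing_m, steer_deg):
    """Per-element phase shifts (degrees, wrapped to [0, 360)) that point the
    main beam of an idealized uniform linear array steer_deg away from
    broadside. Hypothetical helper for illustration only."""
    wavelength = C / freq_hz
    # Progressive phase step between adjacent elements: 2*pi*d*sin(theta)/lambda
    delta = 2 * math.pi * spacing_m * math.sin(math.radians(steer_deg)) / wavelength
    # Element n lags by n*delta so the signals add in phase toward steer_deg
    return [math.degrees(-n * delta) % 360.0 for n in range(num_elements)]
```

For example, `steering_phases_deg(4, 10e9, (C / 10e9) / 2, 30.0)` gives phases of roughly 0°, 270°, 180°, and 90°. Changing only these phases, with no moving parts, redirects the beam, which is why AESA radars can retarget so quickly.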

SAR systems also adopt similar transmit/receive module architectures. GaN semiconductors, with their high power and efficiency, greatly contribute to miniaturization and performance enhancement of such devices.

Therefore, the components developed in this research are expected to significantly improve the performance of X-band military, maritime, and satellite communication radars in Korea and aid in achieving technological self-reliance.

5) Power Amplifier (PA): A critical component that amplifies transmit signals to the power level required at the antenna; it is essential to radar and communication system performance.

6) Low Noise Amplifier (LNA): A circuit that amplifies weak incoming signals while minimizing noise, crucial for reception quality.

7) Switch (SW): A component that rapidly toggles signal paths between transmission and reception in high-frequency circuits.

8) Gallium Arsenide (GaAs): A compound semiconductor traditionally used in high-frequency devices, but with lower output power and efficiency than GaN.

9) AESA (Active Electronically Scanned Array) Radar: An advanced radar system capable of electronically steering beams using an antenna array composed of hundreds of T/R modules.

Wavice GaN RF Semiconductor Mass Production Fab Facility


Since 2020, ETRI has accumulated foundational research results on GaN semiconductor technology through its DMC Convergence Research Department. This latest achievement is a follow-up effort, conducted with Wavice, to link that research to domestic mass-production capabilities.

Dr. Jong-Won Lim, from ETRI’s RF/Power Components Research Section, stated, “By combining ETRI’s design technology with Wavice’s processing technology, we developed three types of high-performance T/R chips for the first time in Korea. We hope this technology contributes to the localization of radar and satellite components.”

Wavice CTO Yun-Ho Choi added, “With the domestic infrastructure capable of mass-producing GaN semiconductors, we have laid the foundation for the self-reliance of critical defense components. This will greatly support the stable development of such systems.”

The research team plans to accelerate the localization of military semiconductor components that have long depended on foreign products. They have completed the transfer of design technology to Wavice and are preparing for full-scale commercialization.

The team has successfully localized GaN-based MMICs for AESA radar T/R modules, including power amplifiers, low-noise amplifiers, and switches, and has even developed a single-chip MMIC integrating all of these functions.

This achievement is part of the NST project titled “Advanced Technology Development and Mass Production for Semiconductor Components in Defense for AESA Radar and SAR Satellites using Domestic Foundry.” It represents a valuable convergence research result that builds upon ETRI’s prior work and advances the technological maturity of GaN semiconductor technology for defense applications using Wavice’s foundry capabilities.

Jong-Won Lim, Principal Researcher

RF/Power Components Research Section

(+82-42-860-6229, jwlim@etri.re.kr)

- Won 2nd place in a challenge at ICRA 2025, the world’s most prestigious robotics conference, demonstrating its technological prowess internationally

- AI technology for recognizing unstructured terrain is expected to be used in autonomous driving and disaster-response robots

Goose 2D Semantic Segmentation Challenge Finalist Results


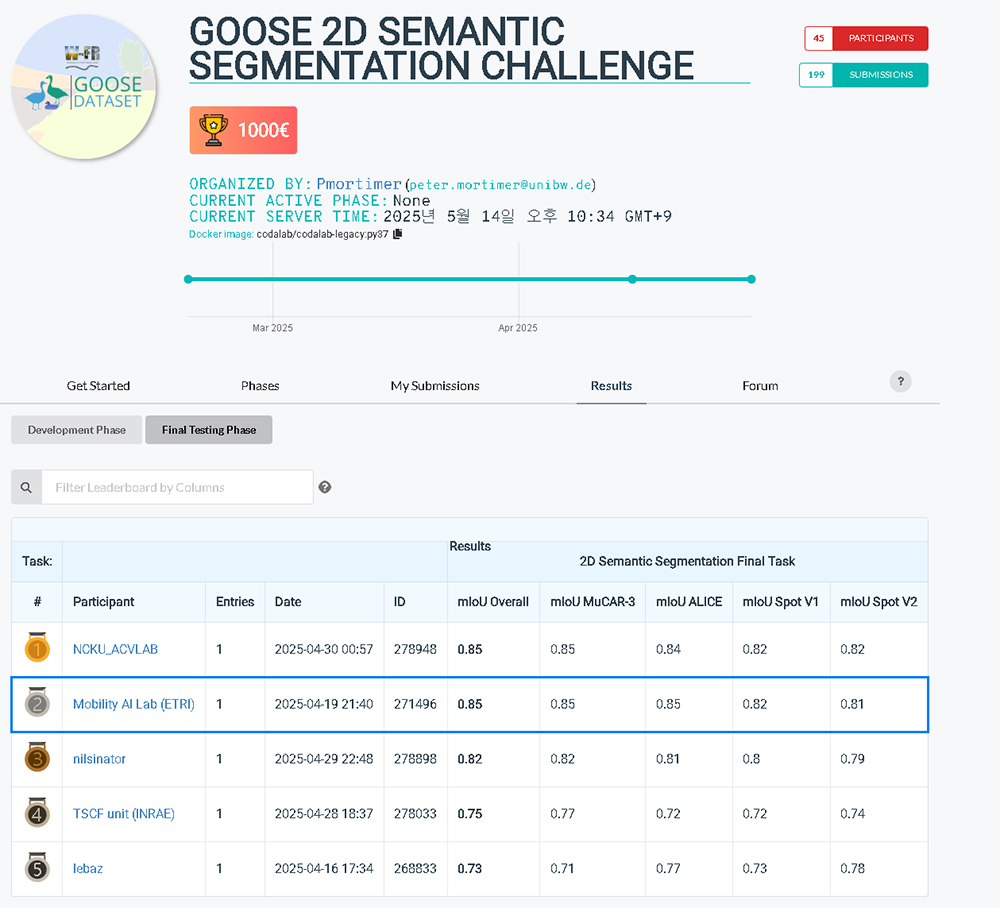

Korean researchers won second place in an international semantic segmentation1) challenge held at ICRA2) 2025, the world’s most prestigious robotics conference. The result raises expectations for their work on image-based vision, the “eyes” of future robots.

Electronics and Telecommunications Research Institute (ETRI) has announced that it participated in the Goose 2D Semantic Segmentation Challenge3) at the Field Robotics4) Workshop of ICRA 2025 and achieved excellent results. This achievement once again proves the international competitiveness of Korea’s AI-based image recognition technology.

1) Semantic segmentation: An artificial intelligence technology that distinguishes ‘what’s where’ on a pixel-by-pixel basis in an image. For example, objects such as “trees,” “ground,” and “stones” are color-coded.

2) ICRA (International Conference on Robotics and Automation): The world’s largest robotics conference, organized by the Institute of Electrical and Electronics Engineers (IEEE), and one of the most prestigious international academic events in robotics and artificial intelligence.

3) Goose 2D Semantic Segmentation Challenge: An international AI technology competition held at the ICRA 2025 official workshop, providing a dataset for AI pixel-by-pixel classification of objects in images taken in unstructured natural environments such as fields and forests (https://www.codabench.org/competitions/5743/)

4) Field robotics: A field of robotics concerned with robots that operate in unstructured environments outside urban areas (fields, forests, farmland, construction sites, etc.). Such robots drive autonomously and perform tasks under conditions that are more complex and unpredictable than typical road environments.

Segmentation result according to input video type


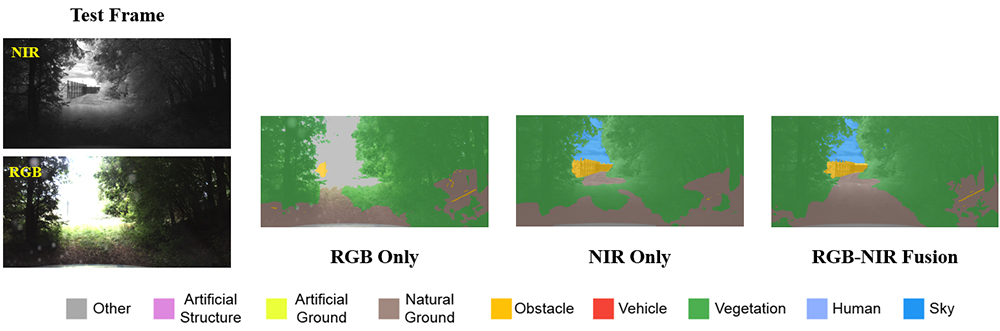

This challenge tests the ability of artificial intelligence technology to accurately classify objects such as bushes, stones, trees, and the ground, pixel by pixel, based on 2D image data captured in unstructured terrain5) such as fields, forests, and construction sites that field robots actually encounter.

Participants adapt pre-trained models6) using limited training data and then compete on semantic segmentation accuracy against an undisclosed test dataset. The higher the mIoU (mean Intersection over Union)7), the better the AI model recognizes objects in unstructured terrain such as forests and construction sites.

A total of eight teams from South Korea, Germany, and Taiwan participated in the challenge. ETRI participated as a team called the ‘Mobility AI Group’ formed by Principal Researcher Ahn Soo Yong and Postdoctoral Researcher Kim Won Jun from the Mobility AI Convergence Research Section (Director Lee Lae Kyoung) at Daegu-Gyeongbuk Research Division. The researchers implemented an artificial intelligence model that performs well in complex, unstructured outdoor environments, resulting in a second-place finish in the competition.

ETRI’s ability to implement AI models that segment objects with high accuracy in unstructured outdoor environments has thus been recognized internationally. It also means that robots can distinguish and understand objects more clearly, closer to the way humans do. First place in the challenge went to a team from Taiwan’s National Cheng Kung University.

5) Unstructured terrain: Terrain that lacks the defined structure of environments such as city streets, for example forests, farmland, gravel roads, fields, and undeveloped land. These complex environments are difficult for autonomous driving technology to handle.

6) Pre-trained model: An AI model that has been trained in advance and can be quickly adapted to new tasks. Using pre-trained models is important in this challenge because only limited training data is available.

7) mIoU (mean Intersection over Union): A representative metric of how accurately an AI model segments objects in an image. For each class, it measures how much the predicted area overlaps with the correct (ground-truth) area, as the ratio of their intersection to their union, and then averages this ratio over all classes. The result lies between 0 and 1, with a higher value indicating better recognition performance.
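To make the mIoU definition concrete, here is a minimal sketch that computes it for two flattened label maps (one class ID per pixel). The function name `mean_iou` and the class IDs (0 = ground, 1 = tree, 2 = stone) are hypothetical. Classes absent from both maps are skipped here; the official Goose challenge scorer may treat unlabeled pixels or absent classes differently.

```python
def mean_iou(pred, target, num_classes):
    """Mean Intersection over Union for flattened label maps.

    pred, target: equal-length sequences of integer class IDs, one per pixel.
    """
    ious = []
    for c in range(num_classes):
        # Pixels labeled c in both the prediction and the ground truth
        inter = sum(1 for p, t in zip(pred, target) if p == c and t == c)
        # Pixels labeled c in either map
        union = sum(1 for p, t in zip(pred, target) if p == c or t == c)
        if union == 0:
            continue  # class absent from both maps; leave it out of the mean
        ious.append(inter / union)
    return sum(ious) / len(ious) if ious else 0.0
```

With `pred = [0, 1, 1, 1, 2, 0]` and `target = [0, 0, 1, 1, 2, 2]`, the per-class IoUs are 1/3, 2/3, and 1/2, so `mean_iou(pred, target, 3)` returns 0.5. Averaging per class, rather than counting correct pixels overall, keeps large classes such as "ground" from masking poor results on small ones such as "stone".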

Original RGB image (left) and segmentation result using the proposed AI model (right)


The dataset provided in this challenge is fundamentally different from traditional city-based semantic segmentation datasets. Unlike typical datasets that consist of structured roads and clear objects, the dataset for this challenge consists of footage captured in unstructured outdoor environments such as forests, fields, and undeveloped areas.

These data include realistic environmental factors such as lighting variations, irregular structures, and visual occlusions, and the visual similarity between objects is another important test of the model’s ability to generalize8). These characteristics set a very high bar for evaluating the technological maturity of practical field robots.

Byun Woo Jin, vice president of the Daegu-Gyeongbuk Research Division, said, “This award is of great significance because it represents international recognition of our technology and research achievements at the world’s most prestigious robotics conference. In particular, image-based semantic segmentation technology can be used in a wide range of mobility applications, including autonomous driving, logistics, and industrial robotics.”

ETRI’s Daegu-Gyeongbuk Research Division focuses on the development of practical technologies that can be applied in the field, and has expressed its ambition to become a research institute that contributes substantially to the domestic and global industrial ecosystem.

Building on this achievement, the researchers are now focusing on advancing autonomous driving9) technology for unstructured terrain. They plan to continue developing robust object segmentation technology based on semantic recognition, enabling stable operation in forests, farmland, construction sites, disaster response areas, and other places where existing autonomous driving technologies struggle to secure recognition accuracy and driving stability. Through this work, they aim to expand the technology into applications such as industrial, agricultural, and disaster response robots.

The award is seen not merely as a competition result but as a milestone that provides international validation of the technology’s applicability in real-world field environments. ETRI will continue to lead innovation across industries, centered on AI-based image recognition technology.

This achievement was carried out as part of the ‘ICT Convergence Technology Advanced Support Project (Mobility) based on the Daegu-Gyeongbuk Regional Industry’ of the Ministry of Science and ICT.

8) Generalization: The ability of the AI model to perform well with new data that was not used to train it. This is an essential property for maintaining performance in real-world environments.

9) Autonomous driving (autonomous mobility): The ability of a vehicle or robot to perceive its environment and move on its own without human intervention. It can be applied in urban centers as well as in forests, agricultural land, disaster sites, and more.

Lee Laekyoung, Director

Mobility AI Convergence Research Section

(+82-53-670-8045, laeklee@etri.re.kr)

- Next-generation interaction technology designed for autonomous vehicles and digital humans

- Lifelike AI avatars expected to be applied to kiosks, banks, news, and advertisements

Electronics and Telecommunications Research Institute (ETRI) has developed hyper-realistic AI technology that can create an avatar that speaks naturally like a real person using only a single portrait photo.

The technology is being seen as a next-generation interface that enables intuitive interaction between vehicles and humans in preparation for the era of fully autonomous driving, and is expected to spread across the digital human industry1).

While conventional speech-driven AI assistants in office environments and in-vehicle navigation systems are limited to simply carrying out commands, ETRI’s hyper-realistic AI avatars feature sophisticated facial expressions and mouth movements that enable natural, human-like conversations.

This enables more human-centered human-machine interaction2), such as an in-vehicle AI agent talking with the driver or interacting with pedestrians.

The core of this technology is a unique algorithm that, unlike traditional generative AI, selectively learns and synthesizes parts of the face that are directly related to utterance, such as the lips and chin. This approach reduces unnecessary information learning and allows for more sophisticated facial expressions, including mouth shapes, teeth, and skin wrinkles.
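The article does not publish ETRI’s algorithm. As a generic illustration of the idea of concentrating learning on speech-related regions, one common technique is a masked reconstruction loss that scores the generated frame only inside a region mask (for example, lips and chin), so errors elsewhere in the face do not drive training. The sketch below is that generic technique, not ETRI’s method; the function name `masked_l1_loss` and the mask input are hypothetical.

```python
def masked_l1_loss(pred, target, mask):
    """Mean absolute error computed only over pixels where mask is 1.

    pred, target: 2D lists of pixel intensities with the same shape.
    mask: 2D list of 0/1 flags marking the speech-related region
          (hypothetical input, e.g. produced by a face-parsing step).
    """
    total = 0.0
    count = 0
    for p_row, t_row, m_row in zip(pred, target, mask):
        for p, t, m in zip(p_row, t_row, m_row):
            if m:  # only pixels inside the masked region contribute
                total += abs(p - t)
                count += 1
    return total / count if count else 0.0
```

With `pred = [[0.0, 1.0], [0.5, 0.5]]` and `target = [[0.0, 0.0], [0.0, 0.5]]`, a mask covering only the top-right pixel gives a loss of 1.0, while a full mask gives 0.375. The 0.5 error in the bottom-left pixel is ignored in the first case because it falls outside the region of interest.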

ETRI explained that the technology, presented at major international conferences such as CVPR5) and AAAI6), has demonstrated superior performance in synthesized visual quality3) and lip synchronization4) accuracy.

In addition to autonomous vehicles, this technology can be utilized in various industries such as ▲kiosks, ▲bank counters, ▲news presentations, ▲advertising models, and is expected to drive innovation in the AI-based digital human industry.

ETRI’s Mobility User Experience Research Section is currently focusing on human-machine interaction (HMI) technologies, and is also developing AI-based driver interface technologies that analyze driver and pedestrian emotions, fatigue, concentration, etc.

Daesub Yoon, Director of the Mobility User Experience Research Section, said, “As mobility technology becomes more advanced, the elderly and socially disadvantaged may be marginalized. We hope that this AI avatar technology will contribute to improving digital literacy7) and make smart mobility services more accessible to all.”

Senior Researcher Daewoong Choi added, “We plan to further advance our generative AI technology so that AI avatars can naturally talk and move like real people. In the future, we’re aiming for interactions that can replace some human labor for ordering, consulting, and more.”

The technology is currently registered on the ETRI Technology Transfer site as ‘A Framework for Photorealistic Talking Face Generation’. The researchers will also actively pursue technology transfer and strategies for commercialization in various industries.

The research was conducted as part of ETRI’s ‘Next Generation Leading New Research Project’ under the task ‘Development of fundamental technology for controllable photo-realistic video generation AI.’

1) Digital human industry: An industry that utilizes artificial intelligence, graphics technology, etc., to create virtual humans (digital humans) that resemble reality and applies them to various fields such as broadcasting, education, healthcare, entertainment, etc.

2) HMI (Human-Machine Interaction): Technology and interfaces that are designed to be intuitive and natural for humans to use when interacting with machines or systems

3) Visual quality: The visual clarity and naturalness of an image or video created using artificial intelligence or graphics technology

4) Lip synchronization: An indicator of how closely a person's lip movements in a video match their speech, and one of the key elements of digital human and image synthesis technology

5) CVPR (Computer Vision and Pattern Recognition): The world’s leading conference in computer vision and pattern recognition, where the latest research results are presented

6) AAAI (Association for the Advancement of Artificial Intelligence): The leading international conference and society in the field of artificial intelligence, promoting the advancement of AI-related technologies and research exchanges

7) Digital literacy: Ability to understand and utilize digital technology and information effectively

Daewoong Choi, Ph.D., Senior Researcher

Mobility User Experience Research Section

(+82-42-860-5585, dwchoi92@etri.re.kr)