ICT TREND

ETRI Develops AI Core Software, ‘Deep Learning Compiler’

- ETRI has released system SW that improves deep learning inference performance and has been established as a standard.

- Compatibility between AI chips and applications is improved, while development cost and time are reduced.

A Korean research team has unveiled a core technology that reduces the time and cost that small and medium-sized enterprises (SMEs) and startups invest in developing artificial intelligence (AI) chips. The team developed system software that solves the compatibility and scalability problems between hardware and software that have long been obstacles, and this is expected to accelerate AI chip development.

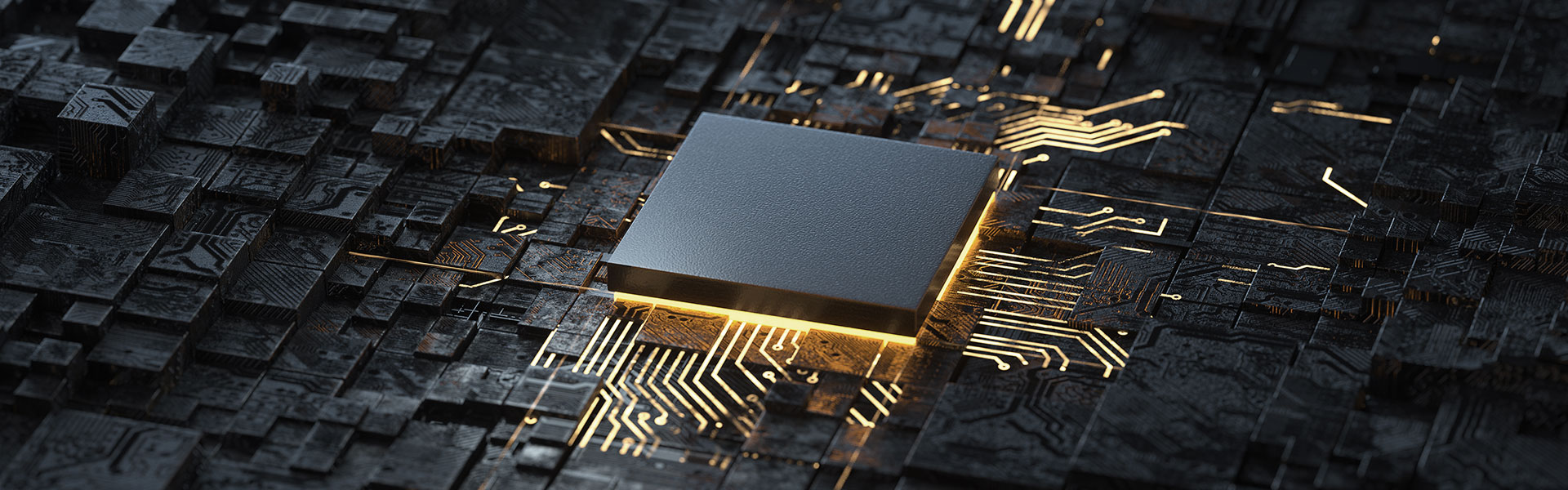

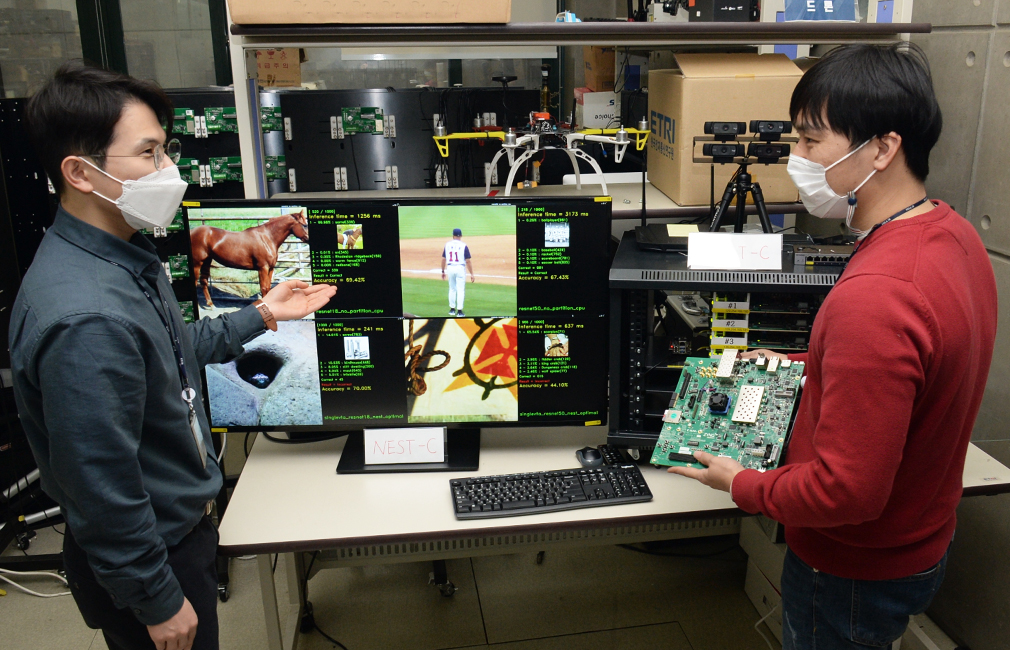

ETRI has developed the Deep Learning Compiler NEST-C 1), a piece of core AI software. It was released on the web (GitHub) along with ETRI's internally developed AI chip hardware so that developers can use it easily.

As AI technology develops, deep learning application services are expanding into various fields. As the AI algorithms that implement these services become more complex, the need for better and more efficient arithmetic processing has increased.

ETRI addressed this problem by defining a common intermediate representation 2) suitable for AI applications and applying it to the Nest Compiler. Resolving the heterogeneity between AI applications and AI chips makes AI chip development easier. This technology has also been established as a standard of the Telecommunications Technology Association (TTA) 3).

1) NEST-C: An AI compiler that receives deep learning models in the ONNX, PyTorch, and Caffe2 formats and generates executable code targeting NPUs, CPUs, and GPUs.

2) Intermediate Representation: A unique internal form of representation that the compiler uses while converting a source program into machine code. An intermediate representation is designed for additional processing, such as optimization and transformation.

3) Telecommunications Technology Association (TTA) standard: TTAK.KO.11.0290, Intermediate Representation for Efficient Neural Network Inference in the embedded system with neural network accelerator.
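The role of a common intermediate representation can be illustrated with a small sketch. Everything in it is hypothetical: the `IRNode` type, the two toy model formats, and the `emit_cpu`/`emit_npu` backends are invented for illustration and do not reflect NEST-C's actual IR or the TTA standard. It only shows the general pattern: different frontends lower to one IR, an optimization runs once on that IR, and each backend consumes the same result.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass(frozen=True)
class IRNode:
    """Hypothetical toy IR node (not NEST-C's real IR)."""
    op: str                  # operation name, e.g. "conv" or "relu"
    inputs: Tuple[str, ...]  # names of the values consumed
    output: str              # name of the value produced


def from_framework_a(layers: List[dict]) -> List[IRNode]:
    """Frontend for an imaginary format that stores layers as dicts."""
    return [IRNode(l["type"].lower(), tuple(l["in"]), l["out"]) for l in layers]


def from_framework_b(lines: List[str]) -> List[IRNode]:
    """Frontend for an imaginary text format: 'out = op(in1, in2)'."""
    nodes = []
    for line in lines:
        out, rhs = (part.strip() for part in line.split("="))
        op, args = rhs.rstrip(")").split("(")
        inputs = tuple(a.strip() for a in args.split(","))
        nodes.append(IRNode(op.strip(), inputs, out))
    return nodes


def fuse_conv_relu(ir: List[IRNode]) -> List[IRNode]:
    """IR-level optimization: merge a conv with the relu that directly follows it.

    Fusion is applied only when the relu is the sole consumer of the conv output.
    """
    fused, i = [], 0
    while i < len(ir):
        node = ir[i]
        nxt = ir[i + 1] if i + 1 < len(ir) else None
        consumers = sum(node.output in n.inputs for n in ir)
        if (node.op == "conv" and nxt is not None and nxt.op == "relu"
                and nxt.inputs == (node.output,) and consumers == 1):
            fused.append(IRNode("conv_relu", node.inputs, nxt.output))
            i += 2
        else:
            fused.append(node)
            i += 1
    return fused


def emit_cpu(ir: List[IRNode]) -> List[str]:
    """Imaginary CPU backend: one pseudo-call per IR node."""
    return [f"cpu_{n.op}({', '.join(n.inputs)}) -> {n.output}" for n in ir]


def emit_npu(ir: List[IRNode]) -> List[str]:
    """Imaginary NPU backend: one pseudo-instruction per IR node."""
    return [f"NPU.{n.op.upper()} {n.output}, {', '.join(n.inputs)}" for n in ir]


def compile_model(model, frontend: Callable, backend: Callable) -> List[str]:
    """Frontend -> shared IR -> shared optimization -> backend."""
    return backend(fuse_conv_relu(frontend(model)))


# The same two-layer network written in both imaginary formats.
model_a = [{"type": "Conv", "in": ["x", "w"], "out": "t0"},
           {"type": "Relu", "in": ["t0"], "out": "y"}]
model_b = ["t0 = conv(x, w)", "y = relu(t0)"]

cpu_code = compile_model(model_a, from_framework_a, emit_cpu)
npu_code = compile_model(model_b, from_framework_b, emit_npu)
```

Because both frontends produce identical IR, the fusion pass and each backend are written once and reused for every input format, which is the compatibility benefit the article attributes to a common intermediate representation.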

Neural Processing Unit (NPU) AI chips, which specialize in AI computation, are drawing more attention than CPUs or GPUs. Running applications such as autonomous driving, the Internet of Things (IoT), and sensors requires AI chips optimized for each application.

At the same time, an optimized compiler 4) must produce accurate executable code for each application to achieve optimal performance. This is why the deep learning compiler, the core system software 5) that guarantees the inference performance of deep learning models, is important: it acts as a bridge between hardware and application software.

In general, manufacturers develop this tool together with the AI chip and sell it along with the system software. Until now, SMEs and startups have had difficulty focusing their capabilities on chip design, because considerable time must be devoted to developing and optimizing system software and applications. System software supplied by a large manufacturer is optimized for that manufacturer's own chips, and because it is developed privately, its applicability is limited.

In particular, different compilers had to be developed depending on the chip type and AI application, which limited interoperability and extension into new areas.

This development will make it possible to shorten the time needed to develop and optimize applications. It is also expected to help reduce the cost of producing and selling chips. The compiler is compatible with NPUs as well as CPUs and GPUs.

4) Compiler: A tool that translates a human-written programming language into a machine-understandable language.

5) System software: A program that helps users operate a computer; the term sits at the same level as application software. System software is typically provided by the manufacturer of the computer hardware. The operating system and the compiler are typical examples, and they support running and developing applications.

The difference becomes even more significant as more types of AI applications and chips are supported. In the past, a separate compiler had to be developed for each combination of deep learning platform and chip type, but these can now be replaced with the single, highly versatile Nest Compiler.
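The scaling argument can be made concrete with a little arithmetic. The sketch below is an illustration under an assumption: it uses the usual compiler-design accounting, in which a shared intermediate representation needs one frontend per model format and one backend per chip type. The format and target names come from footnote 1, but the counts are illustrative, not figures reported by ETRI.

```python
from typing import Sequence


def pairwise_compilers(formats: Sequence[str], chips: Sequence[str]) -> int:
    """One dedicated compiler per (model format, chip type) combination."""
    return len(formats) * len(chips)


def shared_ir_components(formats: Sequence[str], chips: Sequence[str]) -> int:
    """One frontend per model format plus one backend per chip type."""
    return len(formats) + len(chips)


formats = ["ONNX", "PyTorch", "Caffe2"]
chips = ["NPU", "CPU", "GPU"]

before = pairwise_compilers(formats, chips)      # 3 * 3 = 9
after = shared_ir_components(formats, chips)     # 3 + 3 = 6

# Supporting one more chip type (name is hypothetical) widens the gap.
more_chips = chips + ["NewNPU"]
before_more = pairwise_compilers(formats, more_chips)    # 3 * 4 = 12
after_more = shared_ir_components(formats, more_chips)   # 3 + 4 = 7
```

Under this accounting, each new chip type costs one backend instead of one compiler per supported model format, so the saving grows as more formats and chips are added.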

Along with releasing the Nest Compiler as open source, ETRI also released a reference model in which the Nest Compiler is applied to an AI chip developed internally by ETRI, in order to revitalize the related industry ecosystem. This is the first time that both software and hardware for AI chip development have been disclosed.

The research team announced that this disclosure is significant in that Nest Compiler can play a pivotal role in the current AI chip ecosystem where development is fragmented.

ETRI also applied the Nest Compiler to high-performance AI chips developed internally by a domestic AI chip startup. ETRI plans to expand the range of chips its deep learning compiler supports by collaborating with related companies, and it is also promoting the commercialization of AI chip application services through specialized software companies. Moreover, ETRI plans to contribute to the creation of new services by improving the performance and convenience required for developing AI application services.

Taeho Kim, Assistant Vice President of the AI SoC Research Division, said, “The open-source release of the standard deep learning compiler is intended to revitalize the domestic AI chip ecosystem. Technical cooperation is underway to apply the technology at various AI chip companies.”

This technology was developed through the “Neuromorphic Computing SW Platform Technology Development for AI System” project of the Ministry of Science and ICT.

Jay Min Lee, Senior Researcher,

AI Processor/SW Research Section

(+82-42-860-1742, leejaymin@etri.re.kr)