VOL.56

September 2019

Special

Value of New Means of Transportation Brought by Autonomous Driving

Self-driving cars are no longer imaginary vehicles found only in science fiction movies. With advances in ICT, they are now taking shape in reality. Self-driving cars perceive their surroundings in real time and make their own driving decisions. Autonomous driving therefore involves not only automotive innovation but also wide-ranging changes in industry, traffic systems and rules, and smart cities. So how do self-driving cars perceive changing environments and make their way through cities?

Demonstration of ETRI’s self-driving recognition technology (camera, Lidar sensor) in Cheonggye Plaza, Seoul

Autonomous driving perceives the world

Autonomous driving has been drawing much attention both in Korea and around the world. In place of a human driver, the self-driving software (SW) system collects all driving-related data and processes it to operate the car. A self-driving car consists of the SW system that processes sensor input and other data, and the operating system that delivers a convenient and safe mobility service from the point of departure to the destination. Here, a safe mobility service means that the car can not only recognize objects on the road but also accurately tell, for example, whether an object is a static obstacle or a pedestrian. Autonomous driving is safe only when the car can control itself based on accurate recognition of its surroundings, including nearby obstacles and people’s movements and intentions. In addition, the route from departure to destination, the car’s current position, and a precise map that identifies lanes and intersections are essential elements of autonomous driving.
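The route element mentioned above can be illustrated with a classic shortest-path search. The following is a minimal Python sketch, not ETRI’s planner: it assumes a hypothetical road map in which intersections are nodes and road segments carry lengths in meters, and it uses Dijkstra’s algorithm to find the shortest route from departure to destination.

```python
import heapq

# Hypothetical precise-map fragment: intersection -> [(next intersection, segment length in m)]
ROAD_MAP = {
    "A": [("B", 120.0), ("C", 300.0)],
    "B": [("C", 90.0), ("D", 250.0)],
    "C": [("D", 100.0)],
    "D": [],
}


def shortest_route(graph, start, goal):
    """Dijkstra's algorithm: return (total length in m, list of intersections)."""
    queue = [(0.0, start, [start])]  # (distance so far, node, path taken)
    visited = set()
    while queue:
        dist, node, path = heapq.heappop(queue)
        if node == goal:
            return dist, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, length in graph.get(node, []):
            if neighbor not in visited:
                heapq.heappush(queue, (dist + length, neighbor, path + [neighbor]))
    return float("inf"), []  # goal unreachable


print(shortest_route(ROAD_MAP, "A", "D"))  # (310.0, ['A', 'B', 'C', 'D'])
```

A real planner would search a lane-level graph and weigh travel time, turns, and traffic rather than segment length alone.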

Self-driving cars are classified into six classes (Class 0 to Class 5) according to the level of automation and to which party, human or system, responds to errors. In Class 0, the human driver controls everything, with no automated functions. In Classes 1 and 2, a driver assistance system takes over some of the monitoring and operating functions, while the driver remains responsible. In Class 3, the car monitors the surrounding environment itself but requires the driver to take over in specific circumstances. Most commercialized self-driving cars currently offer a Class 2 system. Class 4 cars require no driver intervention or monitoring, even when driving downtown, and Class 5 is complete automation, in which the driver never needs to intervene under any circumstances, including on country roads.
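The six classes can be encoded as a small lookup table. This Python sketch is an illustrative simplification: the field names are assumptions, the class labels follow the commonly used SAE J3016 names, and the boolean flags only summarize the distinctions drawn in the paragraph above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AutomationClass:
    level: int
    name: str
    driver_monitors: bool      # must a human watch the road at all times?
    driver_is_fallback: bool   # must a human take over on request or on failure?
    any_road: bool             # can the system drive under all conditions?


# Simplified summary of the six classes described above.
CLASSES = [
    AutomationClass(0, "No automation", True, True, False),
    AutomationClass(1, "Driver assistance", True, True, False),
    AutomationClass(2, "Partial automation", True, True, False),
    AutomationClass(3, "Conditional automation", False, True, False),
    AutomationClass(4, "High automation", False, False, False),
    AutomationClass(5, "Full automation", False, False, True),
]


def needs_driver_behind_wheel(level: int) -> bool:
    """A human must be ready to drive if they monitor the road or act as fallback."""
    c = CLASSES[level]
    return c.driver_monitors or c.driver_is_fallback


print([c.level for c in CLASSES if not needs_driver_behind_wheel(c.level)])  # [4, 5]
```

The table makes the key boundary explicit: from Class 3 upward the system monitors the road, but only from Class 4 upward can the human stop acting as the fallback.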

At the current R&D stage, a driver must still sit behind the wheel to respond to any errors in the autonomous driving SW system. Uber and Waymo, which provide self-driving taxi services, train their operators to monitor the system at all times in case of contingencies, because accidents may occur when operators ignore the system’s warnings or fail to respond to deliberate attacks. In addition, to develop safer self-driving cars, these companies are researching how to combine 5G communication with artificial intelligence and road infrastructure so that cars can anticipate various situations based on learning.

Sensors: eyes and ears of self-driving cars

Most self-driving cars use cameras, Radar, and Lidar sensors together, while current commercial self-driving cars rely on cameras and Radar sensors. In which situations do these sensors serve as the eyes and ears of self-driving cars?

First, cameras. Self-driving cars recognize road signs in two stages: they first detect the signs in camera images and then classify them. Because cameras can read lanes and signs, they also perceive complex road environments in front of the car, including objects, lanes, traffic lights, and pedestrians. AI makes autonomous driving more sophisticated: objects and road environments can be classified based on big data, which makes them more predictable. Camera recognition technology based on machine learning allows the navigation system to recognize various information in real time, including road signs and how the road slopes and bends.
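The two-stage process (detect, then classify) can be sketched in plain Python. This is a toy illustration under stated assumptions, not ETRI’s recognizer: real systems use machine-learned detectors and classifiers, whereas here a hypothetical color threshold finds the candidate region and a nearest-prototype rule on mean color assigns the class.

```python
from statistics import mean

GREY = (120, 120, 120)
RED = (210, 25, 30)


def is_sign_colored(pixel):
    """Hypothetical rule: strongly red or strongly blue pixels may belong to a sign."""
    r, g, b = pixel
    return (r > 150 and g < 100 and b < 100) or (b > 150 and r < 100 and g < 100)


def detect_sign(image):
    """Stage 1 (detection): bounding box (top, left, bottom, right) of candidate pixels, or None."""
    hits = [(y, x) for y, row in enumerate(image)
            for x, pixel in enumerate(row) if is_sign_colored(pixel)]
    if not hits:
        return None
    ys = [y for y, _ in hits]
    xs = [x for _, x in hits]
    return min(ys), min(xs), max(ys), max(xs)


# Hypothetical class prototypes (mean RGB color of each sign category).
PROTOTYPES = {"prohibitory": (200, 30, 30), "mandatory": (30, 30, 200)}


def classify_sign(image, box):
    """Stage 2 (classification): nearest prototype to the mean color of the cropped box."""
    top, left, bottom, right = box
    crop = [image[y][x] for y in range(top, bottom + 1) for x in range(left, right + 1)]
    avg = [mean(p[i] for p in crop) for i in range(3)]
    return min(PROTOTYPES,
               key=lambda k: sum((a - b) ** 2 for a, b in zip(avg, PROTOTYPES[k])))


# 6x6 synthetic frame with a 2x2 red sign at rows 2-3, columns 3-4.
frame = [[GREY] * 6 for _ in range(6)]
for y in (2, 3):
    for x in (3, 4):
        frame[y][x] = RED

box = detect_sign(frame)
print(box, classify_sign(frame, box))  # (2, 3, 3, 4) prohibitory
```

Splitting the work this way lets the classifier run only on small candidate regions instead of the whole frame, which is one reason two-stage pipelines suit real-time recognition.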

The sensors that give self-driving cars their overall picture of the surroundings are Lidar and Radar, both of which measure the distance to objects. Lidar emits laser pulses and measures the time each pulse takes to return after reflecting off a surface; this identifies the location of specific points and, through repeated measurements, provides information on distance, velocity, and direction. Radar measures the time it takes electromagnetic waves to return and detects the distance to and velocity of nearby objects.
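The time-of-flight principle behind both sensors reduces to simple arithmetic: distance d = c·t/2, where t is the round-trip time and c the speed of light. Radar can additionally derive radial velocity from the Doppler shift f_d of the echo as v = f_d·c/(2·f_0) for carrier frequency f_0. The sketch below assumes an idealized single echo; real sensors also handle noise, multiple returns, and modulation schemes. The 77 GHz carrier is a typical automotive radar band, chosen only as an example.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s, in vacuum (close enough for air)


def tof_distance(round_trip_s):
    """Lidar/Radar range from round-trip time: the signal travels out and back, so halve it."""
    return SPEED_OF_LIGHT * round_trip_s / 2


def doppler_velocity(doppler_shift_hz, carrier_hz):
    """Radial speed from a radar echo's Doppler shift, v = f_d * c / (2 * f_0).
    Positive means the object is approaching."""
    return doppler_shift_hz * SPEED_OF_LIGHT / (2 * carrier_hz)


# An echo returning after 200 ns comes from an object about 30 m away.
print(round(tof_distance(200e-9), 2))            # 29.98
# A 10 kHz shift on a 77 GHz automotive radar is about 19.5 m/s (roughly 70 km/h).
print(round(doppler_velocity(10_000, 77e9), 2))  # 19.47
```

The factor of 2 in both formulas reflects the same fact: the signal covers the sensor-to-object distance twice.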

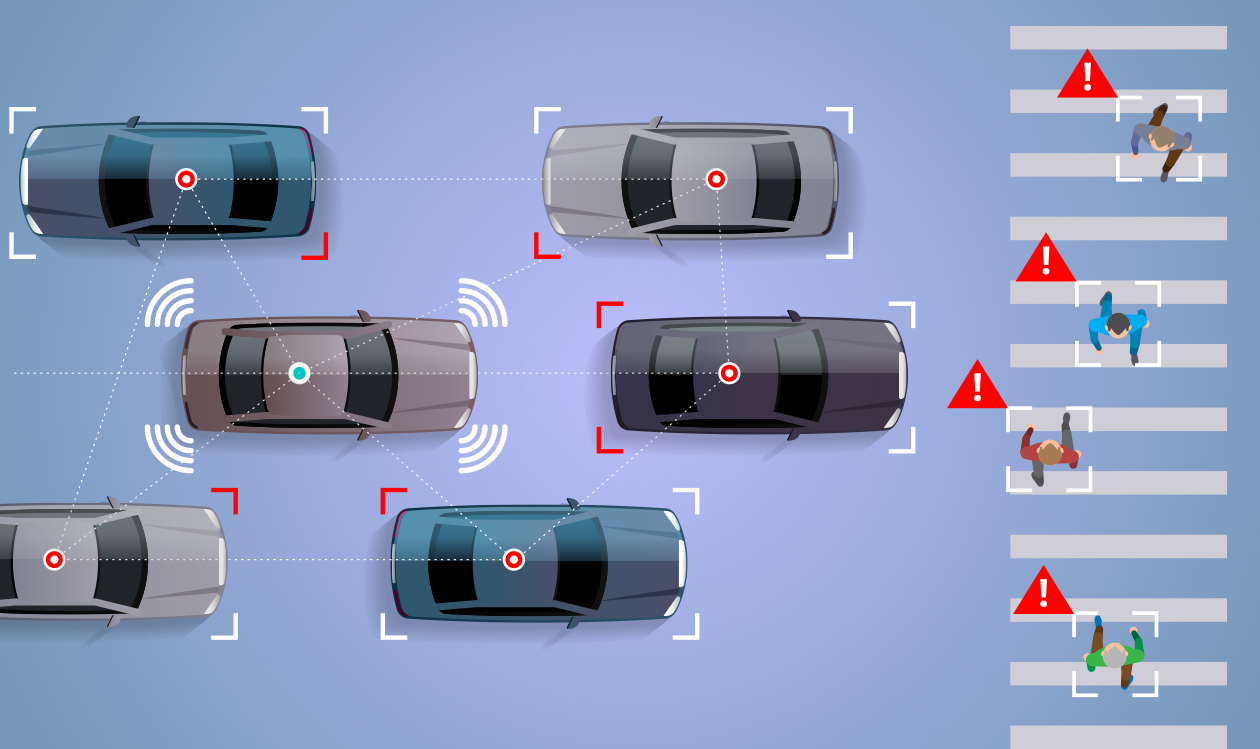

Sensors identify the environment directly around the self-driving car. Yet despite the sophistication of cameras, Radar, and Lidar, it is not easy for a car to avoid accidents that occur when a nearby car suddenly changes lanes or when visibility is low. This is why V2X (Vehicle to Everything) technology, which allows continuous exchange of information among cars, infrastructure, and people, needs to be developed. V2X exchanges information with other vehicles, mobile devices, pedestrians, and infrastructure over wired and wireless networks. It is expected to become a key technology for fully automated transportation infrastructure and a foundation for future cars that communicate with one another.
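To illustrate how V2X complements onboard sensors in the sudden lane-change case, the sketch below has a car scan received messages for announced lane changes into its lane. The message fields, the 50 m horizon, and the function names are hypothetical assumptions for illustration; real V2X deployments use standardized message sets and far richer safety logic.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class V2XMessage:
    """Hypothetical, simplified broadcast message; not a standardized V2X format."""
    sender_id: str
    position_m: float                  # position along the road, in meters
    lane: int
    speed_mps: float
    target_lane: Optional[int] = None  # lane the sender announces it is moving into


def lane_change_alerts(own_lane: int, own_position_m: float,
                       messages: List[V2XMessage], horizon_m: float = 50.0) -> List[str]:
    """Return senders announcing a move into our lane within horizon_m of us,
    including ones our cameras or Lidar may not see yet."""
    return [m.sender_id for m in messages
            if m.target_lane == own_lane
            and m.lane != own_lane
            and abs(m.position_m - own_position_m) <= horizon_m]


inbox = [
    V2XMessage("car-A", 120.0, lane=2, speed_mps=20.0, target_lane=1),  # close, cutting in
    V2XMessage("car-B", 300.0, lane=2, speed_mps=22.0, target_lane=1),  # too far away
    V2XMessage("car-C", 110.0, lane=1, speed_mps=18.0),                 # already in our lane
]
print(lane_change_alerts(1, 100.0, inbox))  # ['car-A']
```

Because the warning comes from the other car’s announced intent rather than from observed motion, it can arrive before the lane change is visible, and it does not depend on visibility.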

V2X (Vehicle to Everything communication)

Technology to enable self-driving cars to communicate with various elements on roads

Demonstration of a self-driving car within ETRI

Safe mobility and reliable companion

ETRI recently fitted image sensors and Lidar sensors to an electric car developed by a Korean SME and developed core technology for Class 3-4 self-driving cars running AI software developed by ETRI. The researchers successfully demonstrated a full trip: calling the car with a smartphone application, getting in, and riding to the destination through autonomous driving. “The key to self-driving cars is that they recognize the surrounding road situation based on sensor data and precise maps and update the map precisely by using what they recognized,” explains Associate Vice President Jeong-dan Choi of the Intelligent Robotics Research Division. “Our error range is within 10cm, which is the world’s smallest.” To realize autonomous driving in small electric cars with a limited power supply, the research team optimized not only the algorithms for car control and situational judgment, but also the AI software for autonomous driving, such as recognition of the car’s location, traffic lights, obstacles, pedestrians, and car models. According to the team, the new SW technology stands out from competitors’ because it is designed specifically for autonomous driving services.
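Choi’s description of recognizing the road through sensor data and precise maps can be illustrated with the localization half of that loop. The following sketch is a deliberate simplification, not ETRI’s algorithm: it assumes landmarks already paired with map entries, estimates only a 2D translation (no rotation) by least squares, and uses the quoted 10 cm figure merely as an example accuracy check. All data and names are hypothetical.

```python
import math
from statistics import fmean


def estimate_offset(observed, mapped):
    """Least-squares 2D translation aligning observed landmarks with their map positions.
    For a pure translation, the optimum is the mean of the per-landmark differences."""
    dx = fmean(m[0] - o[0] for o, m in zip(observed, mapped))
    dy = fmean(m[1] - o[1] for o, m in zip(observed, mapped))
    return dx, dy


def rms_residual(observed, mapped, offset):
    """Root-mean-square distance (m) between corrected observations and the map."""
    sq = [(o[0] + offset[0] - m[0]) ** 2 + (o[1] + offset[1] - m[1]) ** 2
          for o, m in zip(observed, mapped)]
    return math.sqrt(fmean(sq))


# Hypothetical data: landmark positions (m) in the precise map, and where the car
# observed them using its uncorrected pose (offset from the map by about -0.5 m, +0.3 m).
map_landmarks = [(10.0, 2.0), (25.0, -1.0), (40.0, 3.0)]
seen_landmarks = [(9.5, 2.3), (24.5, -0.7), (39.5, 3.3)]

offset = estimate_offset(seen_landmarks, map_landmarks)
pose = (100.0, 5.0)                                   # uncorrected pose estimate
corrected = (pose[0] + offset[0], pose[1] + offset[1])
print([round(v, 2) for v in corrected])               # [100.5, 4.7]
print(rms_residual(seen_landmarks, map_landmarks, offset) < 0.10)  # True
```

Since every observed landmark inherits the same pose error, the translation that best aligns the landmarks is also the correction to apply to the car’s own position.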

Ambitious research efforts are certainly needed, but road environments must also improve. On ordinary roads, where self-driving cars share the road with cars driven by humans, there must be a way to negotiate which car goes first. Current traffic laws and rules written for human drivers should also be revised as the technology develops, and road infrastructure needs to be digitized.
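One simple way to settle which car goes first is a first-come, first-served rule at an intersection. The sketch below assumes that every vehicle (or the infrastructure, on behalf of human-driven cars) reports its arrival time and approach direction; the tie-breaking order and all names are hypothetical, and the article does not specify any particular negotiation scheme.

```python
# Hypothetical tie-breaking order of approaches; a real rule would come from traffic law.
APPROACH_PRIORITY = {"north": 0, "east": 1, "south": 2, "west": 3}


def entry_order(requests):
    """First-come, first-served: sort (vehicle_id, arrival_time_s, approach) requests
    by arrival time, breaking ties with the fixed approach priority."""
    ranked = sorted(requests, key=lambda r: (r[1], APPROACH_PRIORITY[r[2]]))
    return [vehicle_id for vehicle_id, _, _ in ranked]


requests = [
    ("human-1", 2.0, "west"),
    ("av-7", 1.5, "south"),
    ("av-3", 2.0, "north"),
]
print(entry_order(requests))  # ['av-7', 'av-3', 'human-1']
```

The point of the sketch is that any negotiation needs an agreed, deterministic rule for ties, which is exactly where revised traffic rules would come in.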

Self-driving cars will free drivers and pedestrians from manual driving and provide independent and safe mobility. The researchers expect autonomous driving to become not merely a means of transportation but a new convergent industry that creates value during travel. They added that they plan to focus on developing self-driving cars for senior citizens and people with physical disabilities. To this end, the team is committed to developing self-driving cars suited to an aging society and to areas with poor transport access, and to using them for demand-responsive unmanned transportation, logistics shuttles, and regular unmanned minibus services. Experts in various fields have already launched R&D projects to make such reliable companions a reality.